Connect the dots, not collect the dots

Connect the dots, not collect the dots. How can we maximize the real value of a meeting? By maximizing how participants “connect the dots”—what they actually learn from their experiences at the meeting—rather than documenting what we or they think they should have learned.

Seth Godin makes the same point in his 2012 TEDxYouth@BFS talk on the future of education and what we can do about it. Watch this 30-second clip.

Video clip transcript: “Are we asking our kids to collect dots or connect dots? Because we’re really good at measuring how many dots they collect, how many facts they have memorized, how many boxes they have filled in, but we teach nothing about how to connect those dots. You cannot teach connecting dots in a Dummies manual. You can only do it by putting kids into a situation where they can fail.”

—Seth Godin at TEDxYouth@BFS in the YouTube video STOP STEALING DREAMS

Stop collecting dots

Too many meetings still rely on short-term, superficial evaluations as evidence of an event's efficacy. We know that these kinds of meeting evaluations are unreliable (1, 2, 3). Luckily, there are better ways to find out whether participants have learned to connect the dots. Here are four of them:

- The Reminder.

- Ask the right questions.

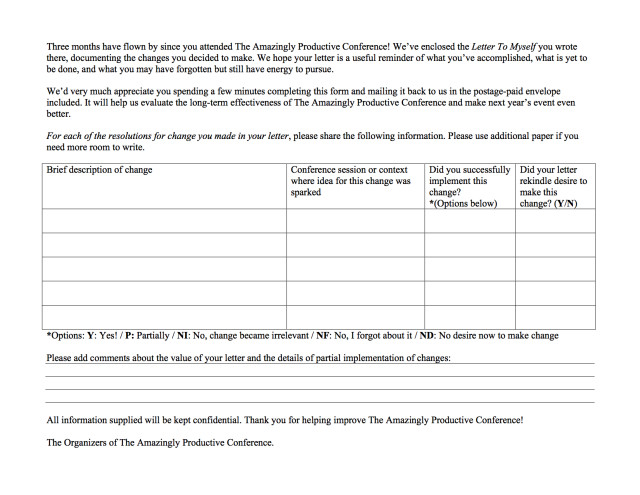

- A Letter to Myself.

- Research KPIs and obtain Net Promoter scores.

Instead, design meetings to connect the dots

Here are five suggestions:

- Build your event around conversation rather than content.

- Maximize social connection by making it an integral component of every session.

- Give people permission to connect.

- Design sessions where the audience can’t stop talking about what they did.

- Give attendees experiences, not things.

Finally, an example of what can happen when you design meetings with these ideas in mind: Linda’s very different experiences at TradConf and PartConf.

P.S.

Today, Seth Godin posted this:

“Hardy came home from school and proudly showed his mom the cheap plastic trinkets he had earned that day.

‘I stood quietly on the dot and so I got some tickets. And if I stand on the dot quietly tomorrow, I can get some more prizes!’”

…

“Is standing on a dot the thing we need to train kids to do? Has each of us spent too much time standing on dots already?”

—On the dot, Seth Godin

One of the easiest, yet often neglected, ways for meeting professionals to improve their craft is to obtain (and act on!) client feedback after designing, producing, or facilitating an event. That's why I like to schedule a thirty-minute call with the client one or two weeks after the event, at a mutually convenient time. This gives the client time to decompress and process the attendee conference evaluations.

Let’s take a hard look at conference evaluations.

Traditional meeting evaluations are unreliable. We typically collect them within a few days of the session. All such short-term evaluations of a meeting or conference session share a fatal flaw: they tell you nothing about the session's long-term effects.