ChatGPT is just a tool

ChatGPT is a type of tool known as a large language model (LLM). However, marketers love to call ChatGPT and other LLMs artificial intelligence (AI) because this framing taps into our culture’s fascination with understanding who we are, how we’re able to do what we do, and whether our abilities are unique. This fascination leads us to give human names to familiar objects, like cars, and to anthropomorphize our pets and tools. Harnessing that fascination is a good way to sell us stuff.

Artificial intelligence?

I’ve programmed computers for over half a century and taught college computer science from 1983 to 1993. Researchers began exploring machine intelligence in the 1940s, and John McCarthy coined the term artificial intelligence in 1955.

In 1993, JET Thomas and I conducted a seminar on AI at Marlboro College. At that time, the second AI winter held sway, and rule-based and frame-based systems predominated. Researchers had not yet implemented key components of effective neural networks, today’s core AI technology. The best chess-playing programs could beat most players, but Grandmaster Garry Kasparov wouldn’t lose a chess match to a machine until 1997. During the seminar, teachers and students alike shared a fascination with the concept of AI. However, the technology of the time generated output that was completely determined by the rules built into its programs.

Unlike the software available during our 1993 seminar, today’s LLMs arrive at their responses in ways humans can’t trace. These sophisticated neural networks, an approach often called deep learning, are everywhere: we use them for speech recognition, image recognition, and natural language processing. ChatGPT has been used to “write and debug computer programs, compose music, teleplays, fairy tales and student essays, answer test questions (sometimes, depending on the test, at a level above the average human test-taker), generate business ideas, write poetry and song lyrics, translate and summarize text, emulate a Linux system, simulate entire chat rooms, play games like tic-tac-toe, or simulate an ATM.”

Just because ChatGPT can be asked to do these things doesn’t mean it produces reliable answers. The software’s creator, OpenAI, acknowledges that ChatGPT “sometimes writes plausible-sounding but incorrect or nonsensical answers”.

Here’s a simple example of the limitations and unreliability of ChatGPT.

The limitations and unreliability of ChatGPT

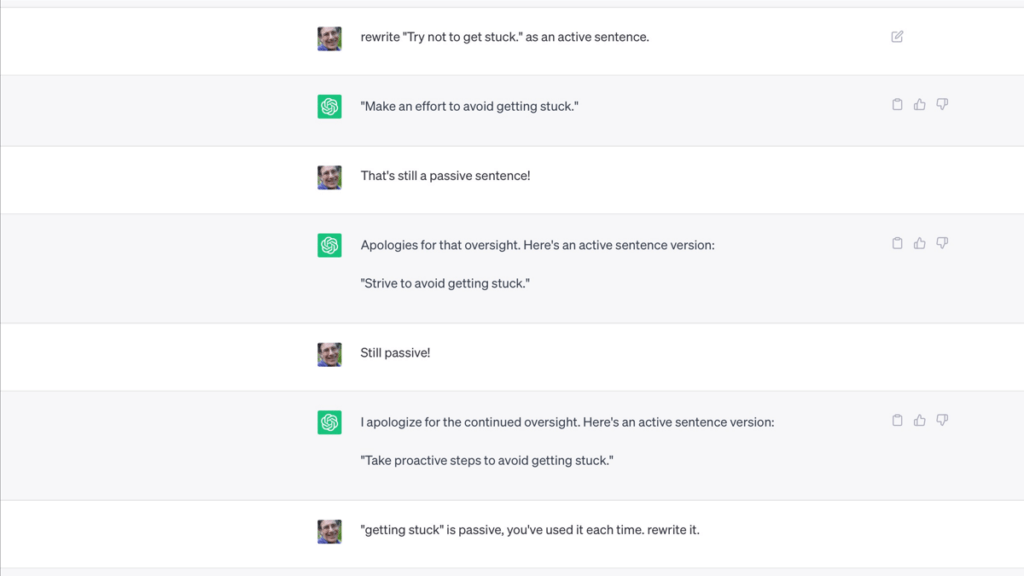

I asked ChatGPT to rewrite a passive-voice sentence in the active voice.

Me: rewrite “Try not to get stuck.” as an active sentence.

ChatGPT: “Make an effort to avoid getting stuck.”

Me: That’s still a passive sentence!

ChatGPT: Apologies for that oversight. Here’s an active sentence version:

“Strive to avoid getting stuck.”

Me: Still passive!

ChatGPT: I apologize for the continued oversight. Here’s an active sentence version:

“Take proactive steps to avoid getting stuck.”

Me: “getting stuck” is passive, you’ve used it each time. rewrite it.

ChatGPT: Apologies once again for the oversight. Here’s an active sentence with a revised phrase:

“Make an effort to prevent becoming trapped.”

Me: “becoming trapped” is passive too. You’ve failed.

ChatGPT: Apologies for the repeated oversight. Here’s an active sentence with an active phrase:

“Exert effort to evade getting trapped.”

Me: Grammar is not your strong point.

ChatGPT: I apologize for the errors in my previous responses. I appreciate your patience, and I’ll make sure to provide a correct and active sentence for you. Here’s an active version of the sentence:

“Strive to avoid getting stuck.”

<Sigh>.

An automated mansplaining machine

This and numerous other examples of ChatGPT’s confident fabrications have led many to characterize it, fairly in my view, as “an automated mansplaining machine”, “autocomplete on steroids”, and a “bias optimizer”. As the third reference points out, “ChatGPT cannot recontextualize or independently seek out new information that contradicts [its] built-in assumptions.”

In the above example, it would have been refreshing if ChatGPT had said at some point, “Sorry, I don’t know how to do that.” Instead, it repeatedly declared it had the answer. Hence the danger of using this tool: you have no guarantee that anything ChatGPT asserts is actually true! How can we use something we can’t completely trust?

The answer is: Carefully!

ChatGPT and the future of work

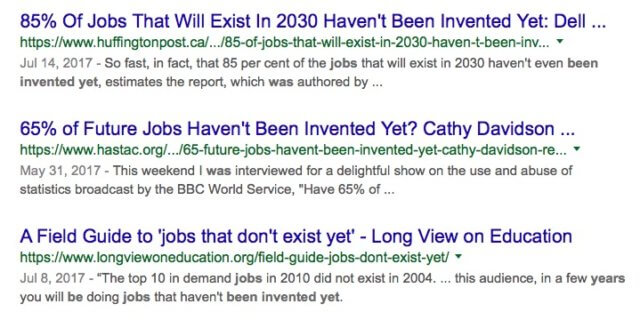

In 2019, I wrote about my concerns regarding the impact of machine learning on the future of work. Recently, people have written thousands of articles about how LLMs like ChatGPT jeopardize the future work prospects of numerous professions.

Some bosses will undoubtedly believe they can replace employees with a smaller staff who generate responses from well-crafted ChatGPT prompts. Such beliefs are dangerously naive. Here are three examples of the problems that can arise:

- A lawyer got in trouble when he relied on fake case law invented by ChatGPT;

- A media company had to make major corrections to an article written by ChatGPT due to multiple inaccuracies; and

- “If you ask ChatGPT to deliver a complete software application, it will fail.”

All new technologies transform the scope of jobs over time, but only some, like the automobile, eliminate entire industries. By making some forms of work more efficient, ChatGPT may allow employees to be more productive, meaning a static industry may be able to employ fewer workers. But I don’t foresee the wholesale elimination of lawyers, writers, software developers, and other professions that many predict.

ChatGPT is just a tool

My advice is to think of ChatGPT as just a tool. Knowing when and how to use a specific tool is important. For example, as I write this post I’m using Grammarly to check my spelling and grammar. It’s a useful tool that catches most of my errors on the fly and occasionally improves how I express myself. However, it often makes suggestions that are simply incorrect and need to be rejected. If I relied solely on Grammarly to proofread my work, errors would slip through. (Not that I’m claiming to be a perfect editor of my own work!)

Similarly, I find ChatGPT useful for suggesting possible blog post titles, summarizing articles, and brainstorming ways to express ideas in print. However, most of the LLM’s suggestions aren’t helpful to me, so I always review them to determine whether anything is worth using.

In conclusion, treat ChatGPT as a useful but fallible tool, rather than an oracle. And don’t worry too much about its impact on your future work prospects!