Combining facilitation tools

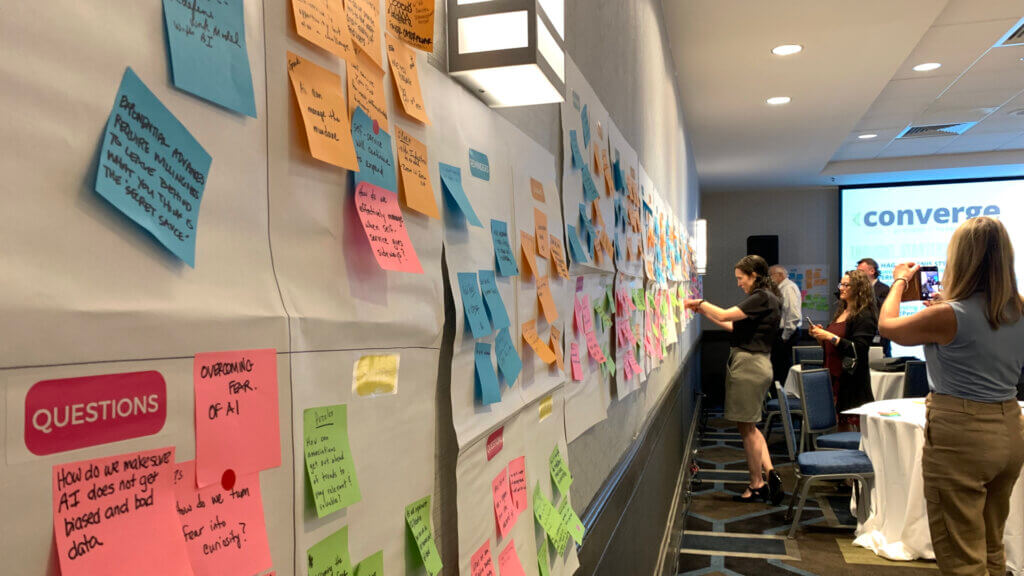

My 2014 post on RSQP gives a clear example of how it works (and my book Event Crowdsourcing includes full details), so I won’t repeat myself here. The 2014 conference and the recent 2023 one each had around 200 participants, so the process and timing (around 25 minutes) were much the same.

But there were two significant differences.

Two significant differences

1. Conference length

The 2014 conference ran for three days.

But the 2023 conference ran a mere eight hours, from 8:30 AM to 4:30 PM on a single day.

2. How we used the gallery created by RSQP

The 2014 conference didn’t use the RSQP gallery to directly influence what would happen during the rest of the conference. A small group of subject-matter experts clustered key theme notes into a valuable public resource for review throughout the event. Participants simply used the clustered gallery to discover what their peers were thinking.

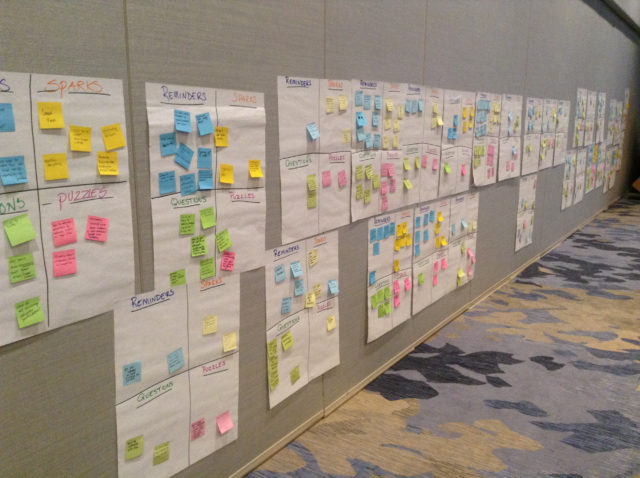

In contrast, I designed the 2023 conference to explore the future of a 50-year-old industry, and we needed to use the information mined by RSQP to create same-day sessions that reflected participants’ top-of-mind issues, questions, and concerns. We had just 2½ hours to:

- review the information on nearly a thousand sticky notes;

- determine an optimum set of sessions to run;

- find facilitators for the sessions; and

- schedule the sessions to time slots and rooms.

Combining facilitation tools

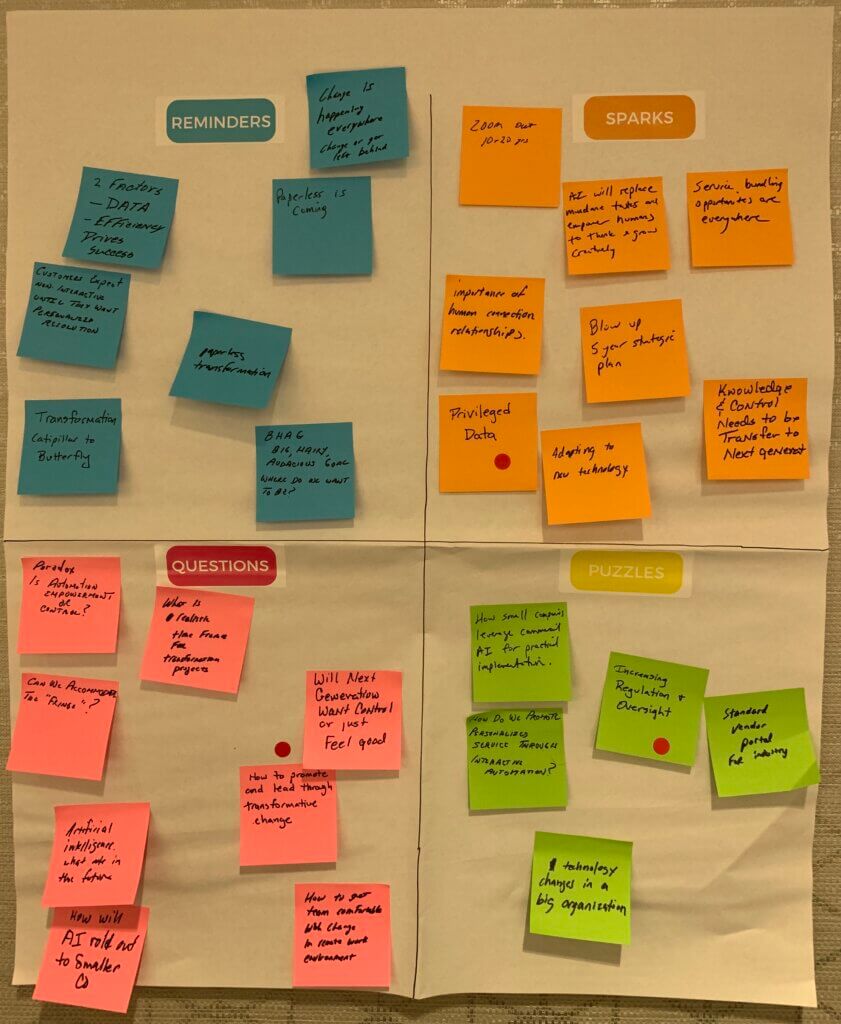

So, at the end of the standard RSQP process, I added a dot voting step. While the participants individually shared their ideas with the others at their table, the staff gave each table a strip of three red sticky dots. When the flip chart sheets were complete, I asked each table to spend three minutes choosing the three topics on their sheet that they thought were the most important for further discussion, and marking each with a red dot. Here’s an example of one table’s work.

An initial review of the gallery’s red-dot items allowed us to quickly zero in on needed and wanted topics. We saw a nice combination of popular ideas and great individual table suggestions. Being able to focus first on the red-dot topics on the flip charts saved us crucial time.
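We did this review by eye, walking the gallery. If you prefer to transcribe the red-dot topics and tally them, the idea can be sketched in a few lines of Python. This is only an illustration, not the procedure we used: the table names, topic labels, and the `tally_red_dots` helper are all hypothetical, and it assumes someone has already normalized the wording of similar topics.

```python
from collections import Counter

# Hypothetical transcription of each table's three red-dot topics.
# These labels are invented for illustration, not taken from the event.
red_dot_topics = {
    "table 1": ["staffing", "pricing", "new technology"],
    "table 2": ["staffing", "succession planning", "pricing"],
    "table 3": ["staffing", "regulation", "new technology"],
}


def tally_red_dots(topics_by_table):
    """Count how many tables put a red dot on each topic."""
    counts = Counter()
    for topics in topics_by_table.values():
        # set() guards against a table listing the same topic twice.
        counts.update(set(topics))
    return counts


counts = tally_red_dots(red_dot_topics)

# Topics dotted by more than one table, most-dotted first (ties alphabetical).
popular = sorted(
    (topic for topic, n in counts.items() if n > 1),
    key=lambda topic: (-counts[topic], topic),
)

# Topics dotted by exactly one table: worth a second look, not an automatic cut.
single_table = sorted(topic for topic, n in counts.items() if n == 1)

print("Popular:", popular)
print("Single-table suggestions:", single_table)
```

A tally like this only surfaces candidates. Deciding which single-table suggestions deserve a session still takes human judgment.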

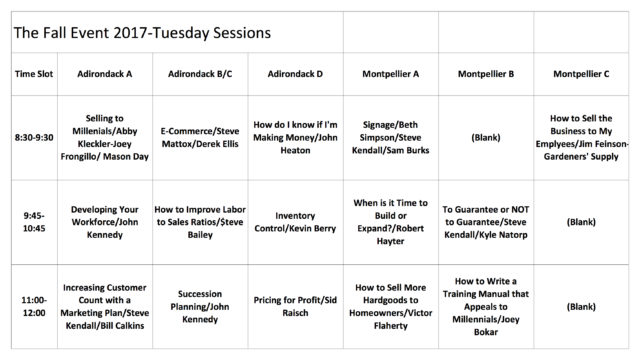

As a result, we determined the topics, assigned facilitators, and scheduled a set of nine sessions in time to announce them during lunch. (Once again, refer to my book Event Crowdsourcing for the step-by-step procedures we used for session selection and scheduling.) We ran the sessions in two one-hour afternoon time slots, and, as is invariably the case with program crowdsourcing, every session was well attended and received great reviews.

Conclusion

I’m sure there are still great group facilitation techniques I have yet to discover, though my facilitation toolbox doesn’t gain as many new tools each year as it did when I began to practice professionally. However, when I consider how many combinations of my existing tools are available to solve new group work situations, I feel increasingly confident in my ability to handle whatever novel facilitation challenges arise.

As my mentor Jerry Weinberg wrote:

“I may run out of ideas, but I’ll never run out of new combinations of ideas.”

—Jerry Weinberg, Weinberg on Writing: The Fieldstone Method
