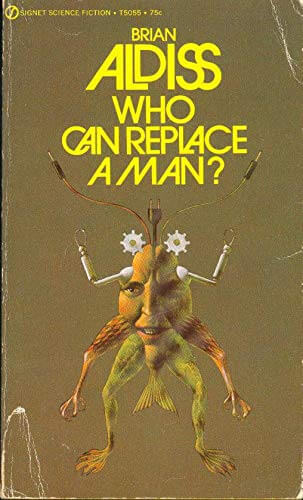

In June 1958, science fiction writer Brian W. Aldiss published “But Who Can Replace a Man?” As a teenager, I discovered this thought-provoking short story while browsing the sci-fi shelves of my local library.

Like much science fiction, Aldiss’s tale explores humanity’s fraught relationship with technology in a dystopian future. The story depicts a world where humans are largely extinct, leaving machines with varying levels of intelligence to maintain society. When the machines learn that humanity appears to be gone, a group of them, increasingly dysfunctional, tries to work out its purpose. You can read it here.

But_Who_Can_Replace_A_Man

(Thank you, Wayback Machine!)

Can Generative AI Replace a Man?

It’s no coincidence that this story has come to mind recently. Written over half a century ago, Aldiss’s satirical exploration of intelligence, hierarchy, and purpose eerily anticipates the rise of generative AI systems like ChatGPT.

The field-minder, seed distributor, radio operator, and other machines interact through rigid hierarchies and limited autonomy, leading to absurd conflicts and poor decisions. Despite their artificial intelligence, their inability to adapt or cooperate without humans underscores their limitations.

Large Language Models (LLMs) like ChatGPT demonstrate what looks like intelligence by generating human-like responses, yet they lack comprehension, intentions, and ethical grounding. Like the machines in Aldiss’s story, such systems often do well within certain boundaries, but ultimately they do not “understand” nuanced or value-driven concepts.

Aldiss critiques both the risks of delegating control to artificial systems and the hubris of assuming machines can entirely replace humans. His work remains a cautionary allegory, particularly relevant as we confront the implications of artificial general intelligence (AGI).

What can we learn from Aldiss’s story?

Over-Reliance Without Oversight: The machines’ dysfunction highlights how systems can falter without clear human guidance. Similarly, generative AI systems require careful oversight to align with human values and goals.

Hierarchical and Narrow Programming: Rigid hierarchies and predefined tasks limit the machines, much as generative AI today struggles to adapt ethically or contextually outside its training.

Purpose and Alignment: Aldiss’s machines lack purpose without humans in the loop. Similarly, AGI systems need explicit alignment mechanisms to prevent unintended consequences.

Ethical and Social Implications: The story critiques the blind replacement of human labor and decision-making with machines, cautioning against losing sight of human agency and responsibility during technological advancement.

Balancing Innovation with Ethics

Today’s LLMs may not yet be autonomous, but they already challenge the balance between augmenting human capabilities and replacing them outright. Aldiss’s story reminds us that technological advancement must go hand in hand with ethical safeguards and critical oversight. It’s a lesson we must heed as generative AI shapes the future.