Two ways to take a hard look at conference evaluations

First, ask yourself the following about every question you ask:

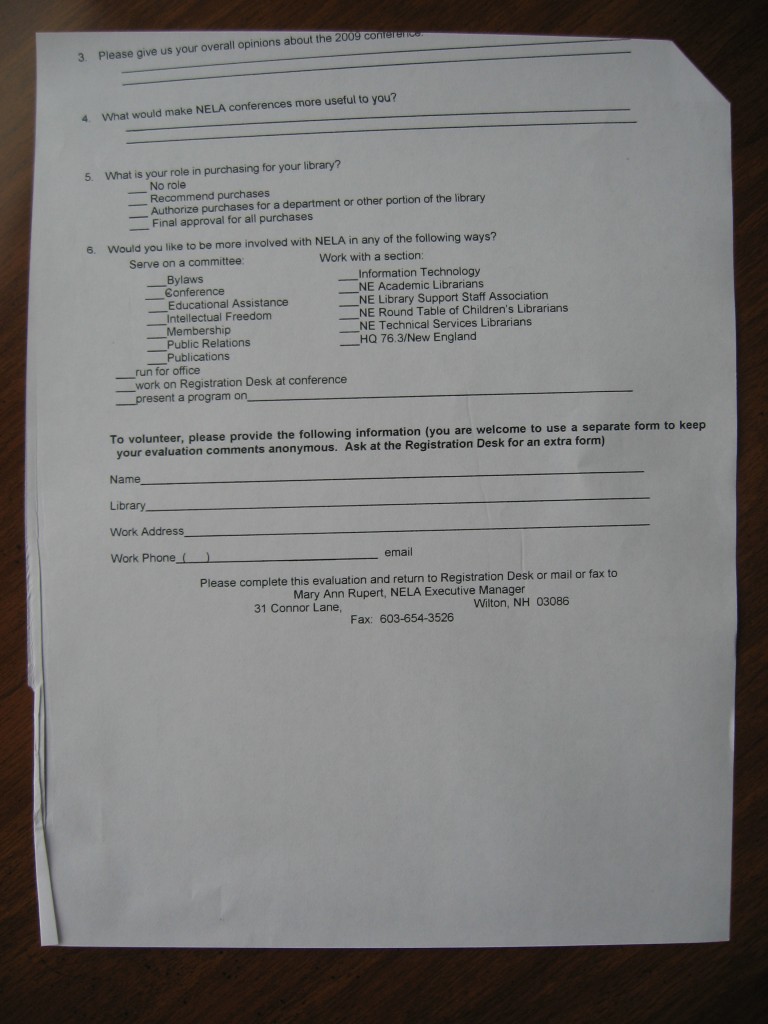

> Are you asking questions capable of making change happen?
>
> After the survey is over, can you say to the bosses, “83% of our customer base agrees with answer A, which means we should change our policy on this issue.” It feels like it’s cheap to add one more question, easy to make the question a bit banal, simple to cover one more issue. But, if the answers aren’t going to make a difference internally, what is the question for?
>
> —Seth Godin

In other words, if a question doesn’t have the potential to lead you to change anything, leave it out!

Second, think about Seth’s sobering experience when responding to “Any other comments?” style questions:

> Here’s a simple test I do, something that has never once led to action: In the last question of a sloppy, census-style customer service survey, when they ask, “anything else?” I put my name and phone number and ask them to call me. They haven’t, never once, not in more than fifty brand experiences.

Gulp. Would your evaluation process fare any better? As Seth concludes:

> If you’re not going to read the answers and take action, why are you asking?

Take a hard look at your conference evaluations. You may be surprised by what you find.